VI-HPS Tuning Workshop 2024

Social Event @ Gasthof Neuwirt Garching © LRZ

VI-HPS Webpage

See https://www.vi-hps.org/training/tws/tw45.html

Agenda

See https://app1.edoobox.com/en/LRZ/Onsite%20Courses/Course.ed.3a985fcbded9_8367752566

Lecturers

- David Böhme (LLNL) remote

- Hugo Bolloré (UVSQ)

- Alexander Geiß (TUD)

- Germán Llort (BSC)

- Michele Martone (LRZ)

- Lau Mercadal (BSC)

- Emmanuel Oseret (UVSQ)

- Amir Raoofy (LRZ)

- Jan André Reuter (JSC) remote

- Rudy Shand (Linaro)

- Sameer Shende (University of Oregon)

- Cédric Valensi (UVSQ)

- Josef Weidendorfer (LRZ)

- Brian Wylie (JSC) remote

- Ilya Zhukhov (JSC)

Organisers

- Cédric Valensi (UVSQ)

- Josef Weidendorfer (LRZ)

- Volker Weinberg (LRZ)

Guided Tour

Tuesday, 11.06.2024, 17:30 - 18:30 CEST

The guided tour provides an overview of the LRZ Compute Cubes including our flagship supercomputer SuperMUC-NG, the network infrastructure for the Munich Scientific Network, one of the first quantum computers and LRZ's huge storage facilities and data libraries.

The tour is at your own risk. Please make sure you have a passport or photo ID with you. The tour will last approx. 1 hour.

Social Event (self-payment)

Wednesday, 12.06.2024, 18:30 CEST - open end

The social event will take place in a typical Bavarian restaurant in Garching near Munich:

Gasthof Neuwirt

Münchener Str. 10

85748 Garching

How to get there:

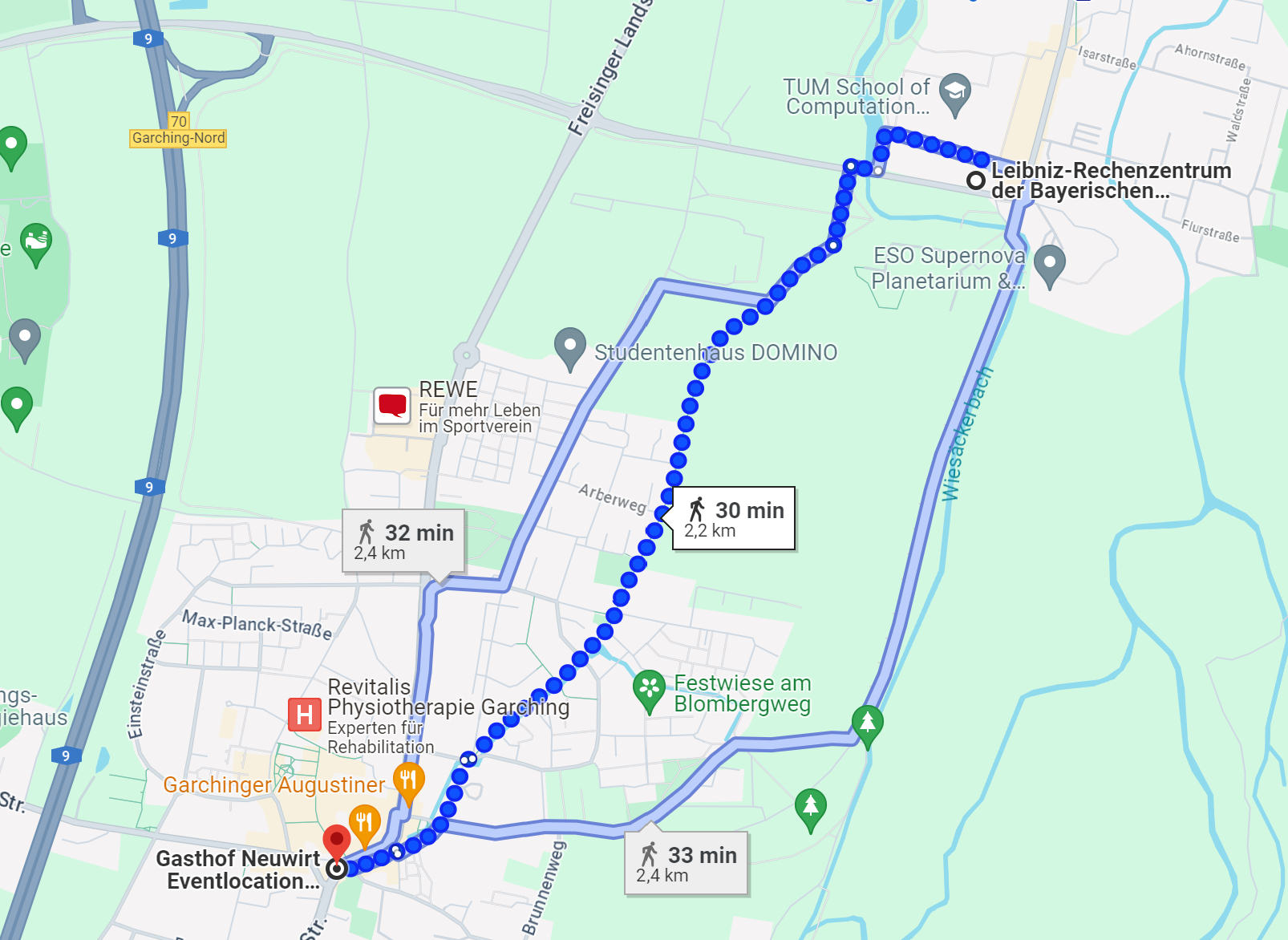

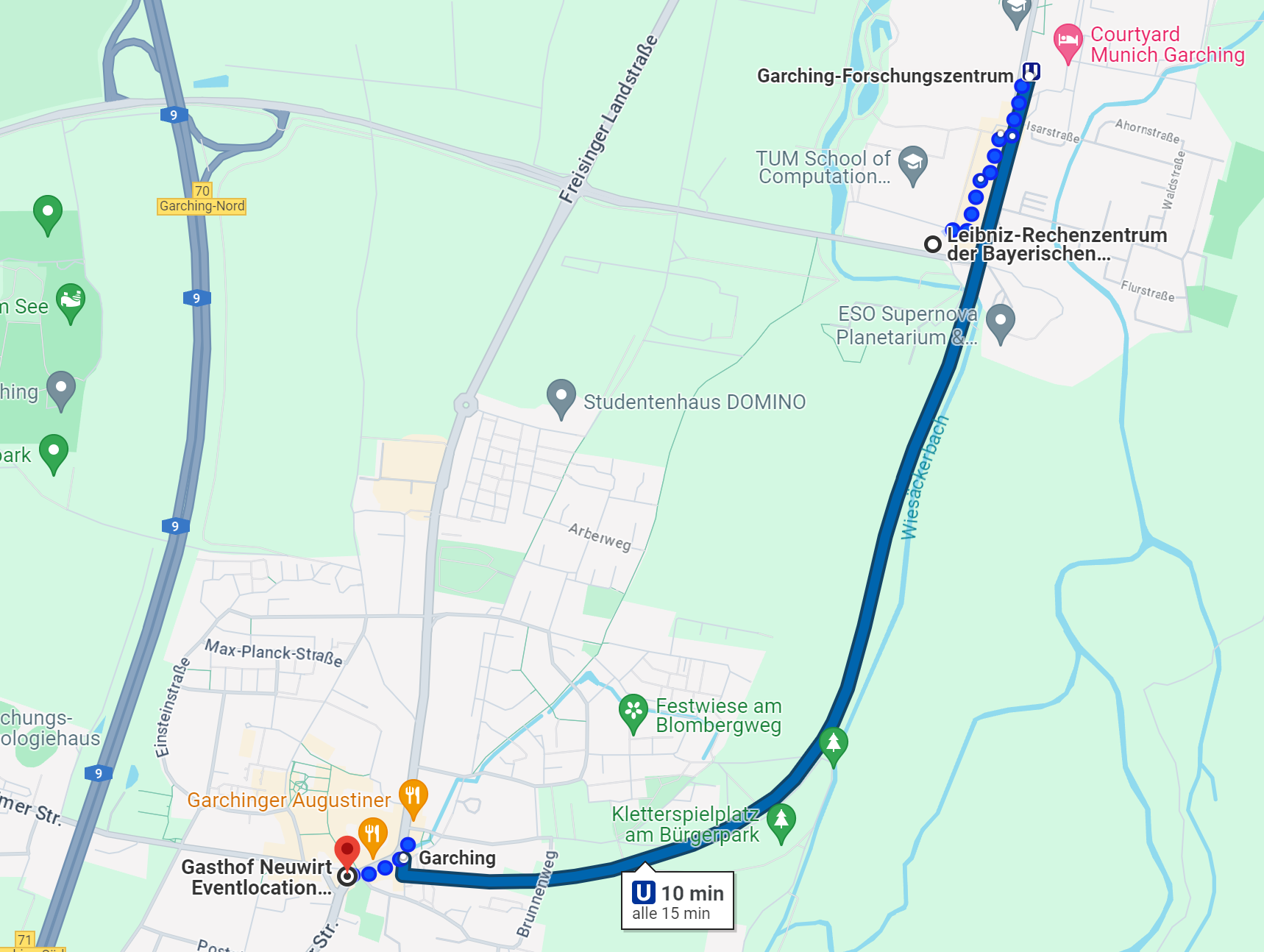

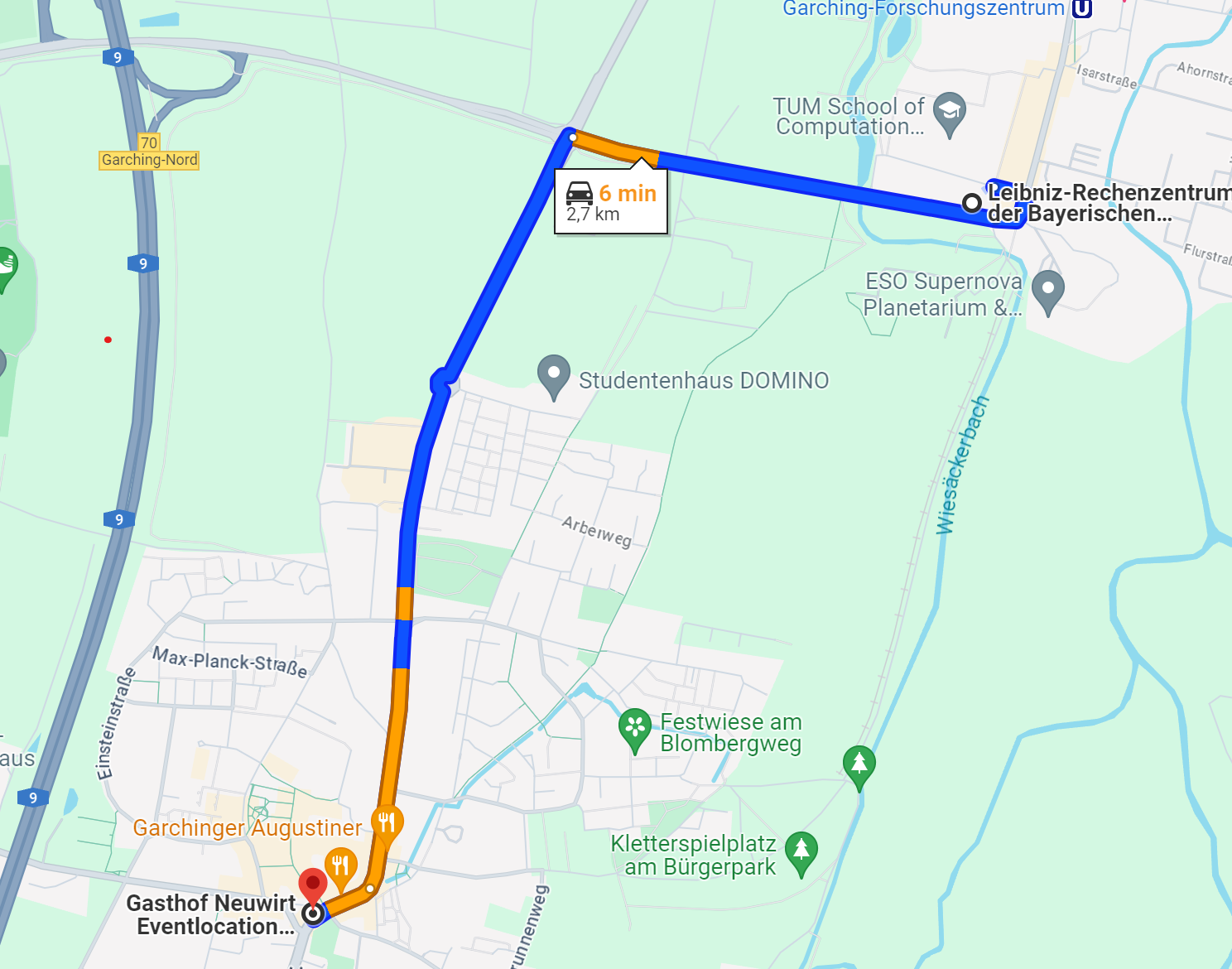

| On foot | By train | By car |
|---|---|---|
| 30-minute walk | 10 minutes: 1 station on subway U6 from "Garching Forschungszentrum" to "Garching". The restaurant is next to the subway station. | 6-minute drive |

Please let us know on the first course day if you want to join the guided tour and/or the social event.

Food and Drinks

For coffee and lunch breaks we recommend the bistro, snack/coffee counter and coffee machines of Gastronomie Wilhelm in the TUM School of Computation, Information and Technology (CIT) building right next to the LRZ.

Slides

Day 1

Day 2

Day 3

Day 4

Survey

Please fill out the online survey at

https://survey.lrz.de/index.php/661989?lang=en

This helps us to

- increase the quality of the courses,

- design the future training programme at LRZ and GCS according to your needs and wishes.

Information on the Linux Cluster

https://doku.lrz.de/linux-cluster-10745672.html

The CoolMUC-2 System

https://doku.lrz.de/coolmuc-2-11484376.html


Login to CoolMUC-2

Login under Windows:

- Install the terminal software PuTTY, if needed: https://www.chiark.greenend.org.uk/~sgtatham/putty/latest.html

- Install the Xming X11 server for Windows, if needed: https://sourceforge.net/projects/xming/

- Start Xming, then PuTTY.

- Enter the host name lxlogin1.lrz.de into the PuTTY host field and click Open.

- Accept and save the host key (first time only).

- Enter the user name and password (provided by LRZ staff) in the console that opens.

Login under Mac:

- Install XQuartz for X11 support on macOS: https://www.xquartz.org/

- Open Terminal

- ssh -Y lxlogin1.lrz.de -l username

- Use user name and password (provided by LRZ staff)

Login under Linux:

- Open xterm

- ssh -Y lxlogin1.lrz.de -l username

- Use user name and password (provided by LRZ staff)

How to use the CoolMUC-2 System

The reservation is only valid during the workshop. For general usage on our Linux Cluster, remove the "--reservation=hhps1s24" option.

- Submit a job:

sbatch --reservation=hhps1s24 job.sh

- List own jobs:

squeue -M cm2_tiny -u hpckurs??

- Cancel jobs:

scancel -M cm2_tiny jobid

- Interactive Access:

module load salloc_conf/cm2_tiny

salloc --nodes=1 --time=02:00:00 --reservation=hhps1s24 --partition=cm2_tiny

or: srun --reservation=hhps1s24 --pty bash

unset I_MPI_HYDRA_BOOTSTRAP
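The reservation also appears as an #SBATCH directive in the batch files on this page, so one way to reuse such a script after the workshop is to strip that line. The following is a minimal sketch only: the helper name strip_reservation and the file names are hypothetical and not part of the LRZ tooling.

```shell
#!/bin/bash
# Hypothetical helper: print a copy of a job script with every
# "#SBATCH --reservation=..." directive removed, so the script can be
# submitted outside the workshop reservation.
strip_reservation() {
    local script="$1"
    # Delete only directive lines that set a reservation; keep everything else.
    sed -E '/^#SBATCH[[:space:]]+--reservation=/d' "$script"
}

# Example usage (file names are placeholders):
#   strip_reservation job.sh > job_general.sh
#   sbatch job_general.sh
```

A reservation given on the command line, as in the sbatch call above, is not affected by this helper and simply has to be left out.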

Intel Software Stack:

- The Intel oneAPI software stack is automatically loaded at login.

Intel Fortran and C/C++ compilers comprise both traditional and new, LLVM-based compiler drivers:

- traditional: ifort, icc, icpc

- LLVM-based ("next-gen"): ifx, icx, icpx, dpcpp

- By default, OpenMP directives in your code are ignored. Use the -qopenmp option to activate OpenMP.

- Use mpiexec -n #tasks to run MPI programs. The compiler wrappers' names follow the usual mpicc, mpif90, mpiCC pattern.

Example OpenMP Batch File

#!/bin/bash

#SBATCH -o /dss/dsshome1/08/hpckurs00/coolmuc.%j.%N.out

#SBATCH -D /dss/dsshome1/08/hpckurs00/

#SBATCH -J coolmuctest

#SBATCH --clusters=cm2_tiny

#SBATCH --nodes=1

#SBATCH --cpus-per-task=28

#SBATCH --get-user-env

#SBATCH --reservation=hhps1s24

#SBATCH --time=02:00:00

module load slurm_setup

export OMP_NUM_THREADS=$SLURM_CPUS_PER_TASK

./myprog.exe
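SLURM_CPUS_PER_TASK is only set when --cpus-per-task is given, so the export above yields an empty value if the same lines are run outside a batch job. A small sketch of a fallback follows, assuming a hypothetical helper threads_for_job and a default of one thread. Both are illustrative choices, not LRZ recommendations.

```shell
#!/bin/bash
# Hypothetical helper: use the Slurm-provided core count if present,
# otherwise fall back to a single thread (assumed default).
threads_for_job() {
    echo "${SLURM_CPUS_PER_TASK:-1}"
}

export OMP_NUM_THREADS="$(threads_for_job)"
echo "Running with OMP_NUM_THREADS=${OMP_NUM_THREADS}"
```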

Example MPI Parallel Batch File (2 nodes with 28 MPI tasks per node)

#!/bin/bash

#SBATCH -o /dss/dsshome1/08/hpckurs00/coolmuc.%j.%N.out

#SBATCH -D /dss/dsshome1/08/hpckurs00

#SBATCH -J coolmuctest

#SBATCH --clusters=cm2_tiny

#SBATCH --nodes=2

#SBATCH --ntasks-per-node=28

#SBATCH --get-user-env

#SBATCH --reservation=hhps1s24

#SBATCH --time=02:00:00

module load slurm_setup

mpiexec -n $SLURM_NTASKS ./myprog.exe
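With the settings above, SLURM_NTASKS is expected to equal the number of nodes times the tasks per node. The arithmetic is written out below as a sketch. The variable names are illustrative and are not Slurm variables.

```shell
#!/bin/bash
# Values taken from the #SBATCH lines of the MPI example above.
nodes=2
ntasks_per_node=28

# Total number of MPI ranks the job is expected to start.
ntasks=$(( nodes * ntasks_per_node ))

# Prints: mpiexec -n 56 ./myprog.exe
echo "mpiexec -n ${ntasks} ./myprog.exe"
```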

Further Batch File Examples

See https://doku.lrz.de/example-parallel-job-scripts-on-the-linux-cluster-10746636.html

Please do not use "#SBATCH --mail-type=…" as this could be considered a denial-of-service attack on the LRZ mail hub!

Reservation

$ scontrol -Mcm2_tiny show reservation

ReservationName=hhps1s24 StartTime=2024-06-10T09:00:00 EndTime=2024-06-13T18:00:00 Duration=3-09:00:00

Nodes=i23r01c02s[01-12],i23r01c03s[01-12],i23r01c04s[01-06] NodeCnt=30 CoreCnt=840 Features=(null) PartitionName=(null) Flags=MAINT,OVERLAP,IGNORE_JOBS,SPEC_NODES

TRES=cpu=1680
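The figures in this output can be cross-checked with a little arithmetic. The sketch below only reuses numbers shown above. Reading the factor of two between TRES cpu and CoreCnt as two hardware threads per core is an assumption, since the output itself does not say so.

```shell
#!/bin/bash
# Figures copied from the scontrol output above.
node_cnt=30
core_cnt=840
tres_cpu=1680

# Nodes listed: s[01-12] + s[01-12] + s[01-06]
listed_nodes=$(( 12 + 12 + 6 ))

# Derived values (integer division; the figures divide evenly).
cores_per_node=$(( core_cnt / node_cnt ))
cpus_per_core=$(( tres_cpu / core_cnt ))

echo "nodes listed:   ${listed_nodes}"
echo "cores per node: ${cores_per_node}"
echo "CPUs per core:  ${cpus_per_core}"
```

The 28 cores per node derived here match the --cpus-per-task=28 and --ntasks-per-node=28 settings in the example batch files.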