Currently, Artificial Intelligence (AI) is widely used as a decision maker to select actions in real-time, high-stress decision problems. It reduces information overload, provides up-to-date information, offers dynamic responses through intelligent agents, enables the communication required for collaborative decisions, and helps deal with uncertainty. In the medical field, hospitals are using supercomputers and homegrown systems to identify patients who might be at risk of, for example, kidney failure, cardiac disease or postoperative infections, and to prevent hospital readmissions. Furthermore, physicians are starting to combine patients' individual health data with material available in public databases and journals to arrive at more personalised treatments.

"Electronic health records are like large quarries where there's lots of gold, and we're just beginning to mine them," says Dr. Eric Horvitz, managing director of Microsoft Research [1].

Who's responsible, AI or the doctor?

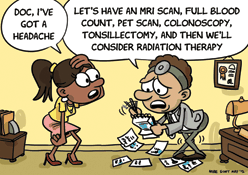

The introduction of AI into medical diagnosis and decision-making has the potential to reduce the number of medical errors and misdiagnoses, and to allow diagnoses based on physiological relationships the physician is unaware of. Nevertheless, by shifting pieces of the decision-making process to an algorithm, increased reliance on AI could complicate malpractice claims when doctors pursue the wrong treatment or decision as a result of an algorithm error. Today, the legal system regards the physician as the trusted expert who is entirely responsible for decisions regarding diagnosis and treatment, and therefore liable if the care provided is negligent or reckless.

However, if the algorithm has a higher accuracy than the average doctor, the blame shouldn't be placed on the physician. The algorithm's decision will statistically be the best option, so a doctor who follows it couldn't be treated as negligent, even if it ends up harming a patient. Furthermore, it is unclear whether medical malpractice law has a protective effect on patients. A study carried out by researchers at Northwestern suggests that strict malpractice liability laws don't necessarily correlate with better outcomes for patients [4].

Nevertheless, the use of AI can also challenge core values upheld within the ethical code of medical professionals, such as confidentiality, dignity, continuity of care, avoidance of conflicts of interest, and informed consent. Conflicts of interest may arise when AI providers, developers and sellers fail to disclose all the risks of systems in which they have a financial stake. Continuity of care might be compromised if the AI system behind a decision support tool is kept proprietary by the AI company, so that health providers can't share or transfer the patient information or predictive analyses. Therefore, it is crucial to establish the right of patients to be informed about the benefits and risks of AI techniques.

Legal Coverage

Artificial Intelligence technology is changing so rapidly, and its applications are multiplying so quickly, that it is difficult for any state to keep up and to guide the development of AI systems meaningfully and in a timely manner. Nevertheless, the EU has addressed issues regarding data privacy, ownership, algorithmic transparency and accountability. The General Data Protection Regulation (GDPR) will take effect as law across the EU in 2018 and will restrict automated individual decision-making that significantly affects users. It will also create a 'right to explanation', by which a user can ask for an explanation of an algorithmic decision made about them.

Strict liability works fine for most consumer products: if a product is defective or causes harm to the user, the blame falls on the company. However, medical algorithms are inherently imperfect. No matter how good an algorithm is, it will occasionally be wrong, so under a strict liability regime every algorithm would give rise to potentially substantial liability some percentage of the time. The pharmaceutical context is similar: it is impossible to make perfect drugs without adverse effects, just as an algorithm will always err a certain percentage of the time. Therefore, most drug product liability regimes rely on claims of 'failure to test or failure to warn', arguing either that the pharmaceutical company didn't perform the testing necessary to establish the safety and efficacy of the drug, or that it neglected to include warnings about possible adverse effects on the drug label.
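A back-of-the-envelope calculation shows why strict liability sits badly with algorithms. Using purely hypothetical figures (an error rate $p = 0.01$ and $N = 100{,}000$ uses; neither number comes from any real system), the expected number of erroneous decisions is:

$$
\mathbb{E}[\text{errors}] = p \cdot N = 0.01 \times 100{,}000 = 1{,}000
$$

Because this number grows linearly with use, a widely deployed algorithm would face a steady, predictable stream of potential claims under strict liability, however accurate it is.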

Nevertheless, this 'failure to test or failure to warn' approach can't be properly translated to AI. Medical algorithms are trained on millions of data points, and they are constantly tested and refined to enhance their accuracy. Because of this extensive testing, which continues even during deployment, their complications and error rates are known with high precision, so the probability that an error is unknown or undisclosed is very small.

Vaccines share many of the characteristics that make AI algorithms unfit for traditional liability regimes for patient harm. They are inherently imperfect and have many well-documented side effects. Furthermore, their profit margin is so small that placing liability for harms on the manufacturers would cause many companies to stop production. The US National Vaccine Injury Compensation Program, created by the National Childhood Vaccine Injury Act of 1986, is funded by a small excise tax on every vaccine dose administered and is used to compensate patients who have been harmed by vaccines, without the need to establish liability or assign guilt. Patients file a claim and, after a review of the injury and the vaccine involved, the government provides compensation.

This system could be extrapolated to the AI context. Every time an algorithm is used, a small fraction of the cost would go into a fund used to compensate injured patients without blaming either the physician or the company that developed the algorithm.
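The mechanism can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a description of any existing program: the class `CompensationFund`, the flat `levy_rate`, and the rule that a claim is paid only up to the fund's current balance are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class CompensationFund:
    """Hypothetical no-fault fund fed by a levy on each algorithm use."""

    levy_rate: float  # assumed fraction of each use's cost diverted to the fund
    balance: float = 0.0
    payouts: list[float] = field(default_factory=list)

    def record_use(self, cost: float) -> float:
        """Divert a fraction of the cost of one algorithm use into the fund."""
        levy = cost * self.levy_rate
        self.balance += levy
        return levy

    def compensate(self, award: float) -> float:
        """Pay an injured patient without assigning blame to anyone.

        Pays the full award if the fund can cover it, otherwise whatever is left.
        """
        paid = min(award, self.balance)
        self.balance -= paid
        self.payouts.append(paid)
        return paid


if __name__ == "__main__":
    fund = CompensationFund(levy_rate=0.02)  # assumed 2% levy
    for _ in range(1000):
        fund.record_use(50.0)  # assumed cost of 50 per use
    print(fund.balance)
    print(fund.compensate(300.0), fund.balance)
```

With an assumed 2% levy on a use costing 50 (in any currency), 1,000 uses add 1,000 to the fund, and a later claim of 300 is paid from that balance with no finding of fault. Capping payouts at the available balance is a simplification; a real scheme would need the levy set actuarially so that expected claims are covered.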