Advances in technology are accelerating the development of artificial intelligence (AI), i.e. systems that can make human-like decisions, show intelligence and use learned skills and expertise. Many well-known companies are researching and developing innovative AI-based products. Examples include robotic surgical equipment and medical diagnosis expert systems (see AI Diagnostic System Overview), but also self-driving cars, complex security systems and automated drones. AI in the field of diagnostics is very promising, but putting the decision-making process in the hands of machines is a big step. [1]

Legal Issues

Modern healthcare systems incorporate more and more technology. With these technologies comes growing public concern about decisions being made by computers rather than by humans. The rapid growth of AI technologies raises ethical, safety and regulatory concerns. Drafting legislation is a difficult task in this context: AI innovation should not be hampered, but the public should be protected from the possible dangers of computer judgment replacing human judgment.

There has been extensive litigation over the safety of surgical robots, especially the "da Vinci" robot. In 1984, a US court stated that "robots cannot be sued". It discussed instead how the manufacturer of a defective robotic pitching machine was liable for civil penalties for the machine's defects. However, AI technologies and autonomous machines have evolved rapidly since then and have become far more sophisticated. [1]

AI Diagnostics - Who is responsible?

A misdiagnosis would usually be the responsibility of the presiding physician. If a defective machine harms a patient, the manufacturer or operator would likely be responsible. But what does this mean for AI?

Today, physicians who use an AI are expected to treat it as an aid to decision-making, not as a replacement. In this sense, the doctor is still responsible for any errors that occur. However, because AI systems are opaque, there is no guarantee that doctors will be able to fully understand the consequences of the information an AI provides or assess its reliability. It is often impossible to tell why an AI has made a particular decision. One can only say that the decision was based on the data it was given. Even if a physician were able to examine such a machine-learning algorithm, doing so would likely be prohibited, because these algorithms are often protected as proprietary information. This open issue becomes more urgent as doctors rely increasingly on AI and question its results less and less.

So, if a doctor can't be held fully responsible, who else can be?

- The AI system itself

If the AI system itself is held responsible for a wrong decision, no one is actually punished, because it is not possible to obtain compensation from software. Patients are left without redress, and the unanswerable philosophical question of machine intelligence arises. It would also mean treating the machine as a legal entity, which raises even more questions.

- The designers of the AI

Holding the designers of the AI responsible usually means holding a team of hundreds responsible, which makes it difficult to assign individual responsibility. In addition, an AI may later be used for a purpose other than the one it was designed for. The time that passes between research, design and deployment makes this more likely. One example is IBM's Watson, which was originally designed for a quiz show (Jeopardy!) but is now used as a medical tool.

- The organization

Blaming the organization would provide a clear target for retribution. However, this approach also has problems. In the absence of design failures or other misconduct, it is difficult to hold the organization responsible for how others have used its product. In addition, holding the organization responsible would make investing in this field less attractive. [2]

Can an artificial intelligence have intuition?

Intuition, or gut feeling, is said to arise in the right hemisphere of the brain. More specifically, it is said to be located in the cerebellum, the ventromedial prefrontal cortex and the locus ceruleus, a nucleus in the pons. There is evidence that intuition is a domain-specific ability that improves with practice. For example, a doctor who has been diagnosing and treating patients for several decades can reportedly trust their gut feeling about a particular illness. Despite the name, gut feeling has nothing to do with the stomach. It is more like a shortcut the brain takes without conscious awareness. [9]

This suggests that intuition can be learned. So, can an artificial intelligence also have intuition?

In fact, there are attempts to incorporate intuition into AI. This approach is called Artificial Intuition or Intuition-Based Learning (IBL).

For example, the Data Science Machine developed at the Massachusetts Institute of Technology (MIT) outperformed 615 of 906 human teams in three data science competitions that usually require some human intuition. The Data Science Machine's predictions were 94%, 96% and 87% as accurate as the winning submissions. They took only 2 to 12 hours to produce, while the human teams worked on their prediction algorithms for months. Each task was a big-data analysis problem that required searching for buried patterns with predictive power. Choosing which "features" of the data to analyze is the "intuitive" part here. [10] Whether this already counts as intuition is open to debate.
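To make "choosing features with predictive power" more concrete, here is a minimal toy sketch. It scores each candidate feature by how strongly it tracks the value to be predicted, using the absolute Pearson correlation, and keeps the strongest ones. This is a deliberately simple criterion of our own choosing. It is not the Data Science Machine's actual method, which is more sophisticated. The function names (`pearson`, `rank_features`) and the data are hypothetical and serve only as illustration.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        return 0.0  # a constant column carries no linear signal
    return cov / (spread_x * spread_y)


def rank_features(columns, target, top_k=2):
    """Keep the top_k columns whose values track the target most strongly.

    The score is the absolute Pearson correlation, so a strong negative
    relationship counts as much as a strong positive one.
    """
    scores = {name: abs(pearson(values, target)) for name, values in columns.items()}
    return sorted(scores, key=scores.get, reverse=True)[:top_k]


# Hypothetical toy data: three candidate features and one value to predict.
columns = {
    "hours_studied": [1, 2, 3, 4, 5],
    "shoe_size": [42, 38, 44, 40, 41],
    "coffee_cups": [5, 4, 3, 2, 1],
}
target = [10, 20, 30, 40, 50]

print(rank_features(columns, target))
```

In this toy data `shoe_size` has zero correlation with the target and is dropped. `hours_studied` (positively related) and `coffee_cups` (negatively related) are kept. A real system would typically also have to construct candidate features rather than merely rank given ones, and correlation only captures linear relationships.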

Technology is advancing rapidly, so in the future it may be able to replicate some aspects of human intuition. Other aspects of gut feeling, however, may never be captured. Suppose a doctor has a gut feeling that a patient is lying about their medical condition, about taking other medication or about not taking their prescribed medication. Unless the patient is monitored, it is unlikely that an AI could develop such a "feeling".

Bibliography

1) Quinn Emanuel Trial Lawyers, Artificial Intelligence Litigation: Can the Law Keep Pace with The Rise of the Machines?, http://www.quinnemanuel.com/the-firm/news-events/article-december-2016-artificial-intelligence-litigation-can-the-law-keep-pace-with-the-rise-of-the-machines/ (accessed 15/07/17)

2) Hart R. (May 2017) When artificial intelligence botches your medical diagnosis, who's to blame?, https://qz.com/989137/when-a-robot-ai-doctor-misdiagnoses-you-whos-to-blame/ (accessed 16/07/17)

3) IBM Think Academy (2015) How It Works: IBM Watson Health, https://www.youtube.com/watch?time_continue=4&v=ZPXCF5e1_HI (accessed 15/07/17)

4) Goodman J. (2015) Why we have to get smart about artificial intelligence, https://www.theguardian.com/media-network/2015/jan/26/artificial-intelligence-developments-risks-law (accessed 15/07/17)

5) Cancer Treatment Centers of America, Laughter therapy, http://www.cancercenter.com/treatments/laughter-therapy/ (accessed 15/07/17)

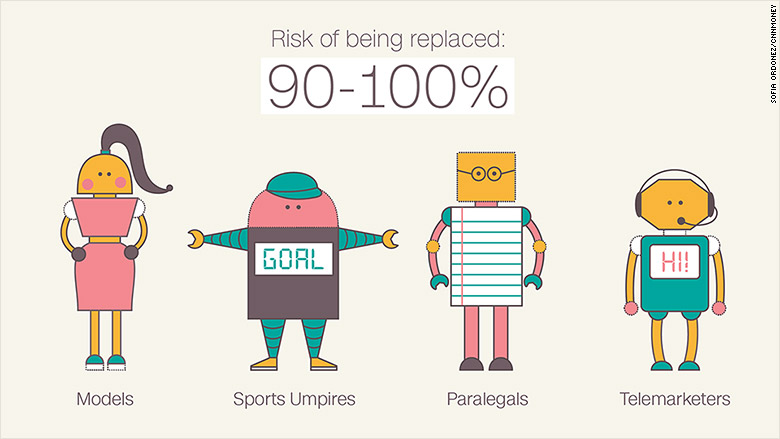

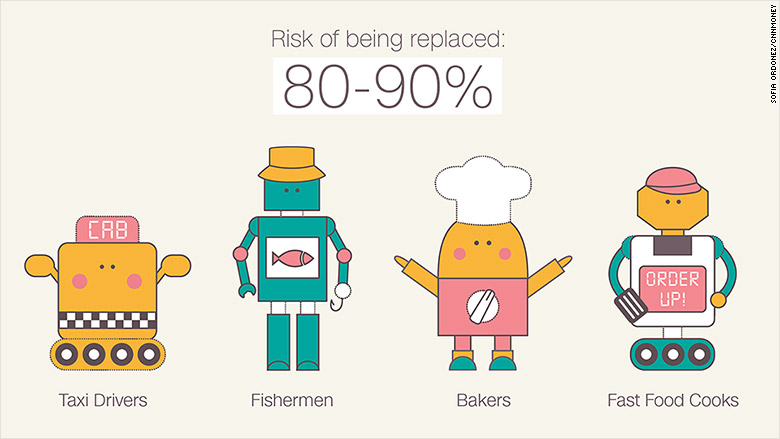

6) CNN Money, http://i2.cdn.turner.com/money/dam/assets/160113120227-job-robots-1-780x439.jpg (accessed 15/07/17)

7) CNN Money, http://i2.cdn.turner.com/money/dam/assets/160113120741-job-robots-2-780x439.jpg (accessed 15/07/17)

8) CNN Money, http://i2.cdn.turner.com/money/dam/assets/160113120848-job-robots-3-780x439.jpg (accessed 15/07/17)

9) Examined Existence, Intuition, Gut Feeling, and the Brain: Understanding Our Intuition, https://examinedexistence.com/intuition-gut-feeling-and-the-brain-understanding-our-intuition/ (accessed 15/07/17)

10) Massachusetts Institute of Technology (2015) Automating big-data analysis, http://news.mit.edu/2015/automating-big-data-analysis-1016 (accessed 15/07/17)