This blog post summarizes and reviews the paper "Autonomic Robotic Ultrasound Imaging System Based on Reinforcement Learning" by Guochen Ning, Xinran Zhang and Hongen Liao [1]

The Big Picture

Motivation and Challenges

Ultrasound (US) is a widely-used modality in medical imaging, as it is non-invasive, fast, cheap and portable. Compared to other imaging methods, however, it requires the manual guidance of the US probe and therefore heavily relies on the user’s proficiency.

Especially in prolonged scans, due to its superior repeatability and stability, an autonomic robotic system is a welcome aid for physicians and capable of obtaining high-quality images even in absence of a trained ultrasonographer [2]. High-quality images require a constant contact force and precise positioning of the probe on the deformable surface. Motion due to breathing and heartbeat must be compensated. Occlusions and hits have to be dealt with confidently and the target inside the body has to be found on-time.

Related Work

Most methods implemented so far use expensive 3D acquisition equipment to virtually reconstruct the skin surface. The US probe is navigated by complex path planning algorithms [3,4]. These methods suffer from the standard occlusion problem and their accuracy is bounded by the limited accuracy of the 3D equipment.

State-of-the-art mechanical structures and control methods are not capable of universally correcting errors caused by non-regular motions of the patient [5]. As traditional control methods require detailed knowledge about the environment, various techniques like fuzzy control [6] or reinforcement learning (RL) [7] are used to adjust the stiffness of the robot without considering prior information about the target. In deep reinforcement learning (DRL), compared to standard RL, the states are not handcrafted. A deep neural network (NN) is used to directly take high-dimensional sensor input and produce useful states. Due to the avoidance of user-defined states, reliability is increased [8] and complex robot controllers are successfully learned [9].

Methodology

The proposed system can be divided into three parts:

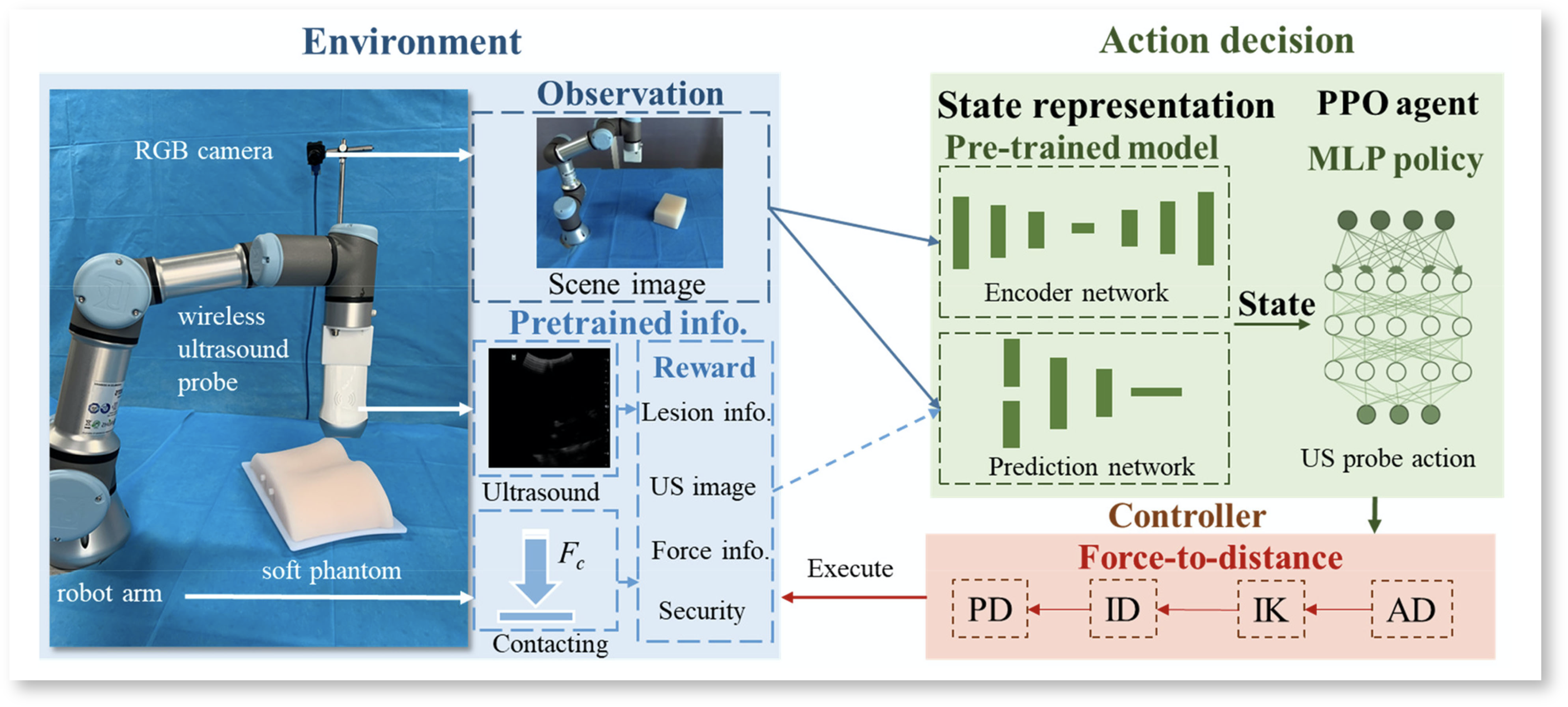

Fig. 1 Framework of the autonomic robotic ultrasound imaging system [1]

Environment

An US probe with an integrated force sensor is attached to a light-weight robot. Opposed to previous systems, no 3D acquisition equipment but only a RGB camera is used here. To train the RL agent, a rewardR_tis computed.

R_t = R_{distance} + R_{US} + R_{lesion} + R_{maintain}

R_{distance}represents the euclidean distance between the probe and target in order to encourage the robot to approach the target.R_{US}has a constant positive value if an US image is obtained, and is zero otherwise.R_{lesion}compares the current US image to a template of the physiological target structure.R_{maintain}is a running addition of previousR_{US} to aim for long uninterrupted scanning episodes. For safety reasons, a scanning episode ends, if a force value over a defined treshold was detected, or if the boarders of the workspace were reached.

Action decision

Firstly, a low-dimensional state vectorS_tis computed by the state representation (SR) model illustrated below:

Fig. 2 Framework of the state representation model [1], blue areas were added by L. Meier

The 256 x 256 x 3 RGB imageI_tis compressed to the image state vector S_t^Iby a convolutional auto encoder (CAE). The same architecture with differently trained weights, encodesI_tand the next imageI_{t+1}in the reward statesS^R_tandS^R_{t+1}. The stateS_tis the concatenation of the image and reward state.

Note, that after training, the parts highlighted in blue are no longer considered. The probe is guided by observing the processed RGB image only.

Finally, a multi-layer perceptron (MLP) is used to implement the RL agent, who determines the robot’s next actionA_tgiven the stateS_t. The weights of the MLP represent the policy\pi(a|s), which was learned using proximal policy optimization (PPO).

Controller

The overall goal of the controller is to keep the contact force between the US probe and skin constant, while moving the probe towards the target. Therefore the output of the RL agent is the desired contact force in three directions.

The components in x-, y-direction encourage the probe to move to the target, while the z-component regulates the skin-contact.

Impedance control, is a popular method in human-robot-interaction and could be applied directly, because it is controlling a force. But for deformable targets, as we have here, admittance control is known to be the better choice [10]. It is the reverse method to impedance control and thus working with a desired position.

The contribution in this paper is to integrate an admittance controller to the force action-space. The authors call this the force-to-displacement (F2D) method.

Experiments and Results

Ablation Study

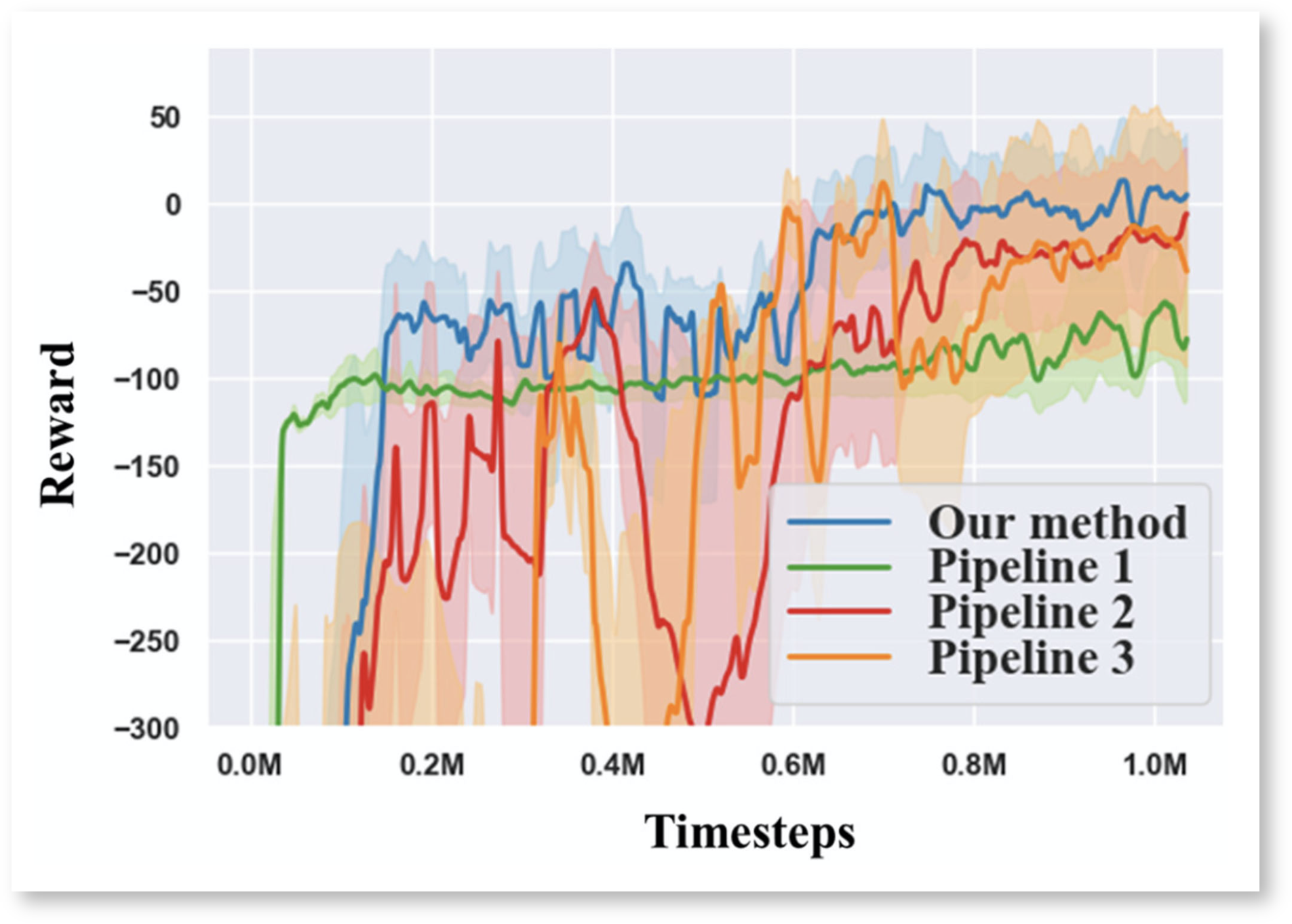

To evaluate the influence of the SR model on the system’s overall performance, three modified pipelines were tested against the proposed one. All systems were trained in a simulator for 1 million steps using the same hyperparameters.

Fig. 3 Learning curves during the training in the simulator Tab. 1 Success rates using different pipelines in the simulation experiment

Only the proposed pipeline, which uses the SR model for multiform information encoding and observes a single processed RGB image only, achieved a positive reward by the end of the training.

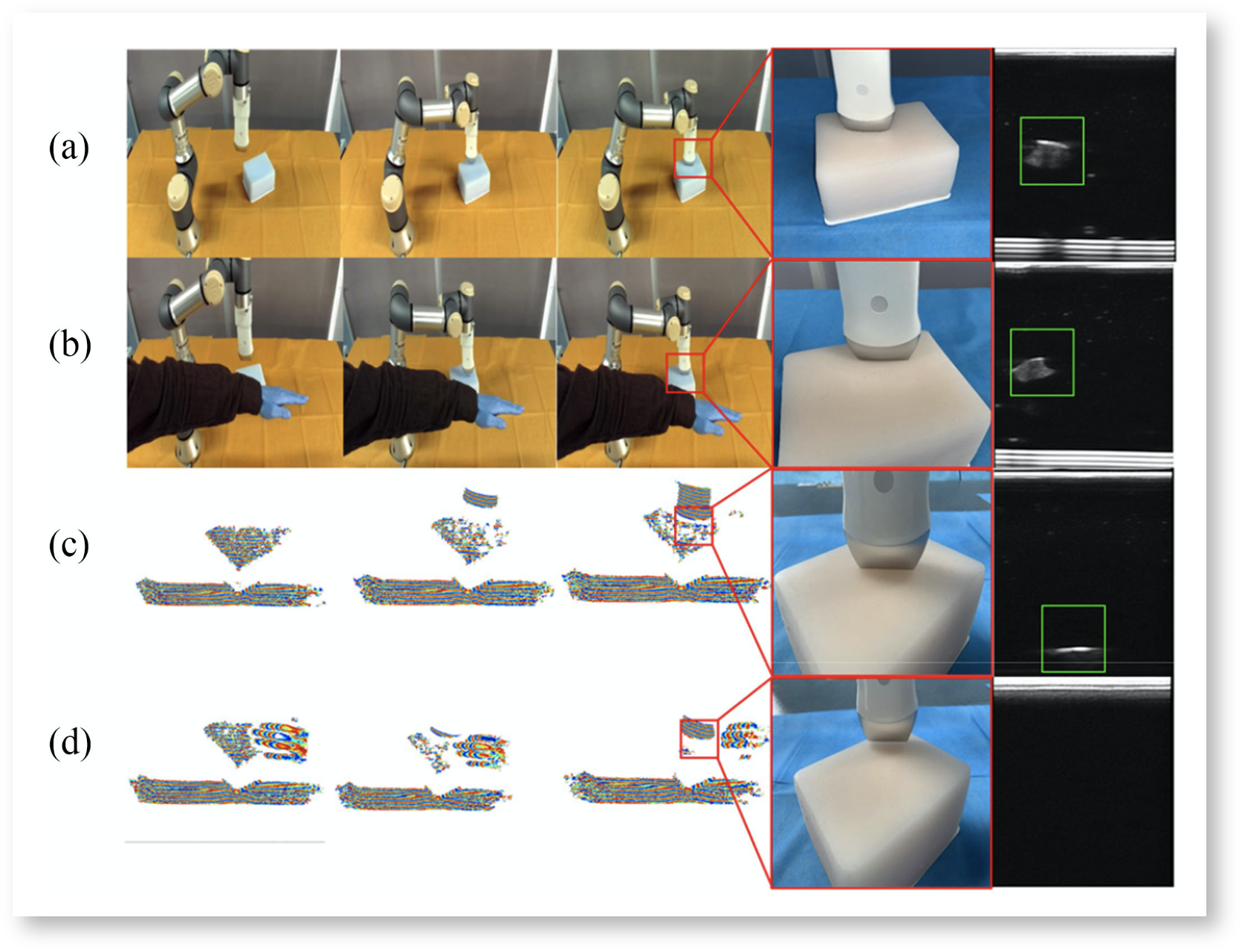

Autonomous US Imaging Feasibility Study

Firstly, the proposed system was compared to a conventional reconstruction method. Undisturbed operation (Fig 4: a,c) was compared to an environment interfered by a forearm (b,d). Three differently shaped and positioned phantoms were used. A scan was considered successful, if a stable US image was obtained before the end of an episode.

In a second experiment, the robot was moved by hand to disturb the scanning process.

Fig. 4 Comparison of proposed method (a, b) to conventional method (c, d). Tab. 2 Static = phantom static, Moved = robot moved, I = Interfered by occlusion,

N35 = surface reconstruction method

As shown in Tab. 2, the state-of-the-art surface reconstruction method showed acceptable performance only for a static target in an undisturbed environment. The proposed method was more successful in all test cases.

F2D Control Experiment

Firstly, to evaluate the effectiveness and feasibility of the F2D method, it was compared to a standard impedance controller, which works in the displacement-to-force way. Fig. 5 shows the learning curve of both controllers during training on the hardware system and a soft slippery phantom.

Fig. 5 The system’s learning curves using F2D or standard impedance control

The F2D method was able to learn the mapping between force and displacement better than standard impedance control and thus achieved positive rewards.

Secondly, for quantitative evaluation of the F2D method the force sensor values were recorded. The phantom was moved in x, y-direction in the time interval 300 - 350 ms. The robot was interrupted by hand at 350 ms.

Fig. 6 Contact force in all three directions while moving the phantom horizontally and interrupting the robot by hand

While the target moved horizontally, the contact force in z-direction remained constant. After the interruption, the probe was controlled back safely.

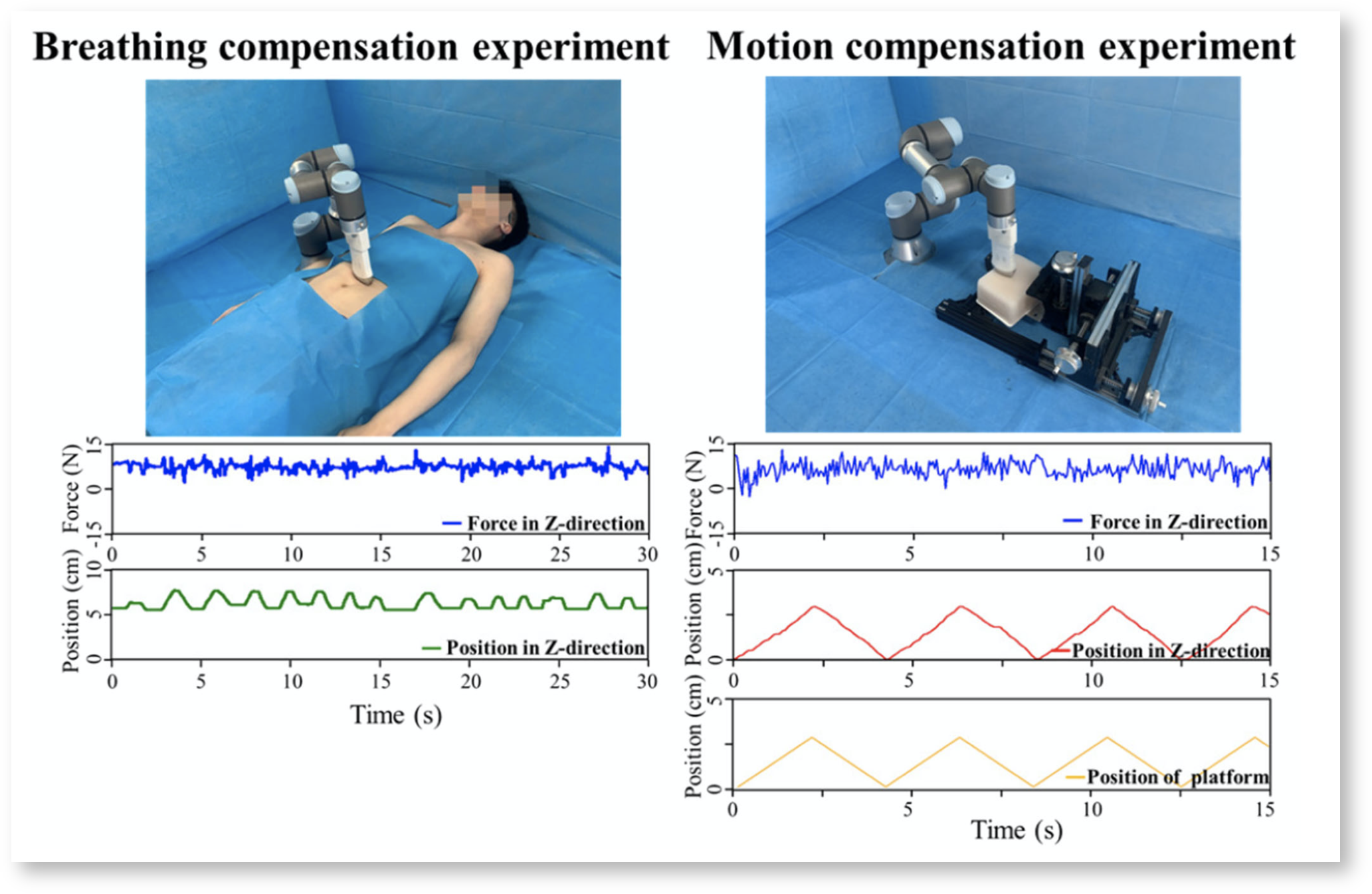

Volunteer Experiments

Motion compensation

To qualitatively evaluate the respiratory motion compensation, the abdominal area of human volunteers was imaged. The contact force in z-direction was monitored, while the subjects were taking deep breaths.

For quantitative evaluation, the phantom was put on a lifting platform.

Fig. 7 Qualitative (on human) and quantitative (on phantom) motion compensation evaluation

Although the force in z-direction was slightly fluctuating due to in- and exhalation, it did not exceed the value range suited for US imaging.

Automatic US Imaging

A preoprative image of a volunteer's spine was set as template for the calculation of R_{lesion} while training the SR model. The RL agent was not further trained for the human target, because the phantom already had a spine-like structure inside.

Fig. 8 Preoperative template and US images obtained by the autonomic system, landmarks highlighted with green dots

Satisfactory US images were obtained, that allow clear identification of structural landmarks. Thus the system was capable of putting the probe in the correct position and applying a suitable contact force.

Student's Review

Strength and Weakness

The most impressive contribution of this paper is encoding invisible information into a visible image. Also, I see a big advantage in avoiding 3D acquisition, as it is expensive and requiring tedious registration procedures.

On the other hand, observing the RGB image only is a waste of information. A doctor would never guide an US probe from looking at the patient only, but from the real-time US image on the screen. How robust will this method be for a modified position of the RGB camera or a disturbed background? I think, this paper has to be understood as a feasibility study and not as the proposal of a system to be transferred to the clinic it that way.

Due to the RL approach, no dataset is required. The policy is learned from self-exploration. Especially in the medical field, the problem of data availability is well-known. As patient-specific preoperative data is not necessary, the system is ready-to-use.

The structure of the methods part raises some confusion. I wished there was a clear distinction between the system in training and in use. In Fig. 2 it is not obvious, that during operation only a small portion of the illustrated components are kept. Therefore I added the blue shadings for this blog post. The control method is introduced overly complicated. At the same time I am missing the derivation of the F2D method and what exactly the contribution of the authors is against the state-of-the-art.

Suggestions for Future Work

- Transfer from spine to further anatomical sites

Integration of real-time US information into action-decision. The feasibility of US image guided [11] and force guided [12] navigation was already shown.

References

[1] Guochen Ning, Xinran Zhang, und Hongen Liao, "Autonomic Robotic Ultrasound Imaging System Based on Reinforcement Learning“, IEEE Trans. Biomed. Eng., vol. 68, no. 9, pp. 2787–2797, 2021.

[2] Alan M. Priester, Shyam Natarajan, and Martin O. Culjat, “Robotic ultrasound systems in medicine”, IEEE Trans. Ultrason., Ferroelect., Freq. Control, vol. 60, no. 3, pp. 507–523, 2013.

[3] Qinghua Huang, Jiulong Lan, and Xuelong Li, “Robotic arm based automatic ultrasound scanning for three-dimensional imaging”, IEEE Trans. Ind. Informat., vol. 15, no. 2, pp. 1173–1182, 2019.

[4] Samir Merouche, Louise Allard, Emmanuel Montagnon, Gilles Soulez, Pascal Bigras, Guy Cloutier “A robotic ultrasound scanner for automatic vessel tracking and three-dimensional reconstruction of B-mode images”, IEEE Trans. Ultrason. Ferroelect., vol. 63, no. 1, pp. 35–46, 2016.

[5] Yudai Sasaki, Fumio Eura, Kento Kobayashi, Ryosuke Kondo, Kyohei Tomita, Yu Nishiyama, Hiroyuki Tsukihara, Naomi Matsumoto, Norihiro Koizumi, “Development of compact portable ultrasound robot for home healthcare”, The Journal of Engineering. 2019, no. 14, pp. 495–499.

[6] Barmak Baigzadehnoe, Zahra Rahmani, Alireza Khosravi, Behrooz Rezaie, “On position/force tracking control problem of cooperative robot manipulators using adaptive fuzzy backstepping approach”, ISA Trans., vol. 70, pp. 432–446, 2017.

[7] Roberto Martín-Martín, Michelle A. Lee, Rachel Gardner, Silvio Savarese, Jeanette Bohg, Animesh Garg, “Variable impedance control in end-effector space: An action space for reinforcement learning in contact-rich tasks”, in Proc. IEEE/RSJ Int. Conf. Intell. Robots Syst., Macau, China, 2019, pp. 1010–1017.

[8] Volodymyr, Koray Kavukcuoglu, David Silver, Andrei A. Rusu, Joel Veness, Marc G. Bellemare, Alex Graves, Martin Riedmiller, Andreas K. Fidjeland, Georg Ostrovski, Sting Petersen, Charles Beattie, Amir Adik, Ioannis Antonoglou, Helen King, Dharshan Kumaran, Dean Wierstra, Shane Legg, Demis Assays, “Human-level control through deep reinforcement learning”, Nature, vol. 518, no. 7540, pp. 529–533, 2015.

[9] Xiaofan Yu, Runze Yu, Jingsong Yang, Xiaohui Duan, “A robotic auto-focus system based on deep reinforcement learning”, in Proc. 15th Int. Conf. Control, Automat., Robot. Vis., 2018, pp. 204–209.

[10] Hninn Wai Wai Hlaing, Aung Myo Thant Sin, Theingi"Admittance Controller for Physical Human-Robot Interaction Using One-DOF Assist Device", International Journal of Scientific & Engineering Research, vol. 8, no. 12, 2017.

[11] Keyu Li, Yangxin Xu, Jian Wang, Dong Ni, Li Liu, Max Q.-H. Meng, "Image-Guided Navigation of a Robotic Ultrasound Probe for Autonomous Spinal Sonography Using a Shadow-aware Dual-Agent Framework" arXiv preprint arXiv:2111.02167, 2021.

[12] Maria Tirindelli, Maria Victoria, Javier Esteban, Seeing Tae Kim, David Navarro-Alarcon, Yong Ping Zheng, Nassir Navab , "Force-Ultrasound Fusion: Bringing Spine Robotic-US to the Next “level”", IEEE Robotics and Automation Letters, vol. 5, no. 4, pp. 5661-5668, 2020.