ANSYS HFSS

ANSYS HFSS is a commercial finite element method (FEM) solver for electromagnetic structures from ANSYS Inc. The acronym HFSS stands for High-Frequency Structure Simulator. The software was acquired from the company ANSOFT and has been part of the ANSYS software portfolio since 2008. It is aimed at low- and high-frequency electromagnetic wave propagation simulations. Today ANSYS HFSS is a 3D electromagnetic (EM) simulation software for designing and simulating high-frequency electronic products such as antennas, antenna arrays, RF or microwave components, high-speed interconnects, filters, connectors, IC packages and printed circuit boards. Engineers worldwide use ANSYS HFSS to design high-frequency, high-speed electronics found in communications systems, radar systems, advanced driver assistance systems (ADAS), satellites, internet-of-things (IoT) products and other high-speed RF and digital devices.

Further information about ANSYS HFSS, licensing of the ANSYS software and related terms of software usage at LRZ, the ANSYS mailing list, access to the ANSYS software documentation and LRZ user support can be found on the main ANSYS documentation page.

Licensing

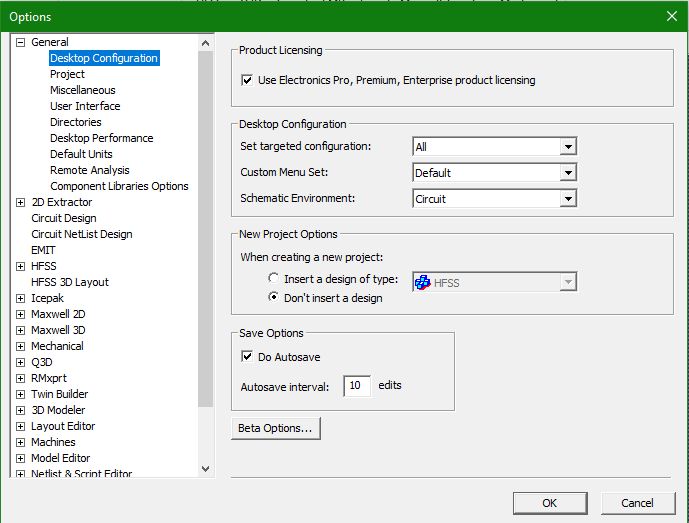

To activate the new ANSYS 2021.R1 licensing scheme for the ANSYS Electronics products (ANSYS Electronics Desktop - AEDT, Maxwell-2d/3d, HFSS, ...), please proceed as follows:

- Open the ANSYS Electronics Desktop (AEDT).

- Open the Options menu.

- Under "Options --> General --> Desktop Configuration", set the check mark for "Use Electronics Pro, Premium, Enterprise product licensing". This option activates the new 2021.R1 license feature increments for the ANSYS Electronics products.

Afterwards, the Options menu should look like the screenshot shown in this section.

Possible Support on LRZ HPC Systems for ANSYS Electronics Software

With ANSYS Release 2019.R3, an attempt was made to make the ANSYS Electronics software available on the LRZ HPC systems, i.e. the Linux Clusters CMUC2/3.

Unfortunately, the ANSYS Electronics software relies entirely on the proprietary ANSYS scheduler ANSYS Remote Solver Manager (RSM), which is not compatible with the SLURM scheduler used on the LRZ HPC systems. With direct help from the ANSYS developers, it was possible for ANSYS Release 2019.R3 to get electronics solvers such as Maxwell-2d/3d and HFSS running on the LRZ HPC systems. However, this developer support was no longer provided for later releases of the ANSYS software.

Therefore, releases of the ANSYS Electronics software later than version 2019.R3 are currently known to run only on local laptops/workstations (mainly under the Windows operating system, or on Linux with a locally provided ANSYS RSM scheduler). For more specific questions on ANSYS Electronics software on LRZ HPC systems, please send your query to LRZ Support.

Getting Started

Once you are logged into one of the LRZ cluster systems, you can check whether ANSYS HFSS is available (i.e. installed) by looking for a corresponding ANSYS Electronics Desktop module:

> module avail ansysedt

Load the environment module of the preferred ANSYS Electronics Desktop (ansysedt) version, e.g.:

> module load ansysedt/2019.R3

You can use ANSYS Electronics Desktop, with all its integrated simulation approaches, in interactive GUI mode solely for pre- and/or postprocessing on the Login Nodes (Linux: SSH option "-Y" for X11 forwarding; Windows: PuTTY and Xming for X11 forwarding). This interactive usage is mainly intended for quick changes to the simulation setup that require GUI access. Since ANSYS Electronics Desktop loads the mesh into the login node's memory, this approach is only applicable to comparably small cases. It is NOT permitted to run computationally intensive ANSYS HFSS simulations or postprocessing sessions with large memory consumption on Login Nodes.

The formerly existing Remote Visualization systems were switched off in March 2024 without replacement because they had reached their end of life. Any work related to ANSYS Electronics Desktop, interactive mesh generation and graphically intensive pre- and postprocessing tasks therefore needs to be carried out on local computer systems using an ANSYS Academic Research license, which is available from LRZ for an annual license fee.

ANSYS HFSS Parallel Execution (Batch Mode)

It is not permitted to run computationally intensive ANSYS HFSS simulations on the front-end Login Nodes, so as not to disturb other LRZ users. However, ANSYS HFSS simulations can be run in batch mode on the LRZ Linux Clusters or even on SuperMUC-NG. This is done by wrapping the intended ANSYS HFSS simulation run in a SLURM job script, as described below.

All parallel ANSYS HFSS simulations on the LRZ Linux Clusters and SuperMUC-NG are submitted as non-interactive batch jobs to the appropriate scheduling system (SLURM) into the different pre-defined parallel execution queues. Further information about the batch queuing systems and the queue definitions, capabilities and limitations can be found on the documentation pages of the corresponding HPC system (LinuxCluster, SuperMUC-NG). By default, ANSYS supports only commercial schedulers (e.g. LSF, PBS-Pro, SGE, ANSYS RSM) for parallel simulations with ANSYS EM products. More recently, however, ANSYS EM provides a first beta implementation of SLURM support (called ANSYS EM Tight Integration for SLURM). The parallel execution capability described below for the LRZ cluster systems is based on this still somewhat experimental SLURM support.

For job submission to the batch queuing system, a small shell script needs to be provided that contains:

- Batch-queuing-system-specific commands defining the job resources

- Module command to load the ANSYS Electronics Desktop (ansysedt) environment module

- A batch configuration file for the intended ANSYS HFSS simulation

- Start command for parallel execution of ansysedt with all appropriate command line parameters

The configuration of the parallel cluster partition (list of node names and corresponding number of cores) is passed from the batch queuing system (SLURM) to the ansysedt command through specific environment variables.
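The environment variables mentioned above can be read in a job script roughly as in the following minimal sketch. It expands SLURM's compressed node list with `scontrol show hostnames` and pairs each node with the per-node task count. The function name `make_machinelist` is hypothetical, and the `host:cores` output format is an assumption for illustration only; with the ANSYS EM Tight Integration for SLURM, ansysedt may read these variables by itself.

```shell
#!/bin/bash
# Sketch: turn SLURM's node list plus a per-node core count into a
# comma-separated "host:cores" list. The "host:cores" format is an
# ASSUMPTION for illustration; check which machine-list syntax your
# ansysedt release expects before passing it on.
#
# Usage inside a job (SLURM_NTASKS_PER_NODE is only set when the job
# script requests --ntasks-per-node):
#   make_machinelist "$SLURM_NTASKS_PER_NODE" \
#       $(scontrol show hostnames "$SLURM_JOB_NODELIST")
make_machinelist() {
  local cores="$1"
  shift
  local out="" host
  for host in "$@"; do
    # Prepend a comma for every entry except the first one.
    out+="${out:+,}${host}:${cores}"
  done
  printf '%s\n' "$out"
}
```

For example, `make_machinelist 64 node1 node2` prints `node1:64,node2:64`.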

Furthermore, for longer simulation runs we recommend that LRZ cluster users write regular backup files, which can serve as the basis for a job restart in case of a machine or job failure. A good practice for a 48-hour ANSYS HFSS simulation (the maximum time limit) is to write backup files every 6 or 12 hours. When setting the wall-clock time limit, please plan enough buffer time for writing output and result files, which can be time-consuming depending on your application.
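As a small planning aid for this recommendation, the hypothetical shell function below lists the elapsed hours at which backups would fall before the solver deadline, i.e. the wall-clock limit minus a buffer for writing results. It only does the arithmetic; how and when HFSS actually writes backup or restart data depends on your simulation setup and is not shown here.

```shell
#!/bin/bash
# plan_backups WALL_HOURS INTERVAL_HOURS BUFFER_MINUTES  (hypothetical helper)
# Prints the elapsed hours at which backups fall strictly before the
# deadline = wall-clock limit - buffer reserved for writing output files.
plan_backups() {
  local wall_min=$(( $1 * 60 ))
  local step_min=$(( $2 * 60 ))
  local buffer_min=$3
  local deadline=$(( wall_min - buffer_min ))
  local t
  local -a hours=()
  if (( step_min <= 0 )); then
    echo "backup interval must be positive" >&2
    return 1
  fi
  for (( t = step_min; t < deadline; t += step_min )); do
    hours+=( $(( t / 60 )) )
  done
  echo "${hours[*]}"
}
```

With a 48-hour limit and a 60-minute buffer, `plan_backups 48 12 60` prints `12 24 36`, and `plan_backups 48 6 60` prints `6 12 18 24 30 36 42`.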

ANSYS HFSS Job Submission on LRZ Linux Clusters under SLES12 using SLURM

ANSYS EM solutions are not supported on the CM2 Linux Cluster, since its SLES15 operating system is not a supported OS.

The following is an example of a SLURM job submission script for ANSYS HFSS on CoolMUC-3 (SLURM queue = mpp3).

Although ANSYS 2020.R1 is currently set as the default version, the ANSYS EM Tight Integration for the SLURM scheduler is only available for ANSYS EM version 2019.R3. The module ansysedt/2019.R3 therefore has to be loaded explicitly in the job script.
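A minimal sketch of such a job script, combining the ingredients listed earlier (SLURM resource directives, the module command, a batch configuration file and the ansysedt start command), could look as follows. All #SBATCH values (cluster name, node and core counts), the file names `my_project.aedt` and `batch_options.txt`, the function names, and the ansysedt flags `-ng`, `-monitor`, `-waitforlicense`, `-distributed`, `-auto`, `-machinelist numcores=...`, `-batchoptions` and `-batchsolve` are assumptions to be checked against the LRZ and ANSYS EM 2019.R3 documentation.

```shell
#!/bin/bash
# Sketch of a SLURM job script for ANSYS HFSS on CoolMUC-3 (mpp3).
# ASSUMPTIONS: the #SBATCH values, the file names and the ansysedt flags
# are illustrative; verify them against the LRZ Linux Cluster documentation
# and the ANSYS EM 2019.R3 command-line reference before use.
#SBATCH -J hfss_job
#SBATCH -o ./%x.%j.out
#SBATCH -D ./
#SBATCH --clusters=mpp3
#SBATCH --nodes=2
#SBATCH --ntasks-per-node=64
#SBATCH --get-user-env
#SBATCH --time=48:00:00

# Hypothetical input files: replace with your own project and the batch
# configuration (options) file for your HFSS simulation.
PROJECT="my_project.aedt"
BATCH_OPTIONS="batch_options.txt"

# Collect the ansysedt arguments in an array so that file names with spaces
# survive quoting. The flag names are an assumption (see lead-in).
build_ansysedt_args() {
  local numcores="$1"
  ANSYSEDT_ARGS=(-ng -monitor -waitforlicense -distributed -auto
                 -machinelist "numcores=${numcores}"
                 -batchoptions "$BATCH_OPTIONS"
                 -batchsolve "$PROJECT")
}

run_hfss() {
  # The SLURM tight integration is only available for 2019.R3.
  module load ansysedt/2019.R3
  # Total cores = nodes x tasks per node, both provided by SLURM.
  local numcores=$(( SLURM_JOB_NUM_NODES * SLURM_NTASKS_PER_NODE ))
  build_ansysedt_args "$numcores"
  # No mpirun/mpiexec/srun: the ansysedt startup script launches Intel MPI.
  ansysedt "${ANSYSEDT_ARGS[@]}"
}

# Start the solver only inside a SLURM allocation, never on a login node.
if [ -n "${SLURM_JOB_ID:-}" ]; then
  run_hfss
else
  echo "SLURM_JOB_ID is not set: submit this script with sbatch." >&2
fi
```

The guard at the end is a design choice of this sketch: if the script is started by accident on a login node, it only prints a message instead of launching a computationally intensive solver run.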

Assuming that the SLURM script has been saved under the filename "hfss_mpp3_slurm.sh", submit the batch job by issuing the following command on one of the Linux Cluster login nodes:

> sbatch hfss_mpp3_slurm.sh

Warning: Do NOT additionally use mpirun, mpiexec or any srun command to start the parallel processes. This is done in the background by an MPI wrapper in the ansysedt startup script. Also, do not try to replace the default Intel MPI with any other MPI implementation to run ANSYS HFSS in parallel. On the LRZ cluster systems, only Intel MPI is supported and known to work properly with ANSYS HFSS.